Information

Our indoor positioning system TPC_IPS Ver1.0 was released on April 1, 2022. In the future, we also plan to release the IPS application templates "QuickIPS" and the "TPC_IPS Web API", which allow interaction with external IPSs/RTLSs.

We have been developing an IPS (Indoor Positioning System, which is a system that estimates the position of things indoors) using Raspberry Pi terminals and beacons. Last year, we posted a few blog articles and YouTube videos on a prototype system with our original algorithm called TCOT (Two-Circles-Oriented Trilateration).

Trilateration-based position estimation systems, including TCOT, have a great advantage over the fingerprinting method: they require no prior data accumulation and no data updates. On the other hand, their positioning accuracy is low.

This time, we placed Raspberry Pi terminals (hereinafter sometimes referred to as terminals) and beacons in the test environment. In advance, we stored in a database the position coordinates of the beacons and the terminals, together with the RSSI values of the beacons acquired by the terminals (this is called "fingerprinting"). We then verified how much the positioning accuracy improves when the fingerprint data is applied to machine learning.

There are numerous machine learning tools, and this time we decided to use Orange and scikit-learn. Orange is a data mining tool which can be used without programming, and scikit-learn is famous as a machine learning library for Python. Finally, we plotted the respective estimated position results with matplotlib.

Test Environment

The test environment was prepared by arranging 54 Aplix beacons and 13 Raspberry Pi terminals in our office as shown in the figure below. The 13 terminals are placed on the four vertices of squares with 4 m sides (each square is called a grid) and in the center of each square. The beacons to be positioned are placed at both [R] and [B] in the figure.

Data and Features

The database table for storing fingerprint data contains a number of fields. Among them, the terminal names (N1T, N2T, N3T... in the figure below) and the distances between the terminal and the beacon (N1R, N2R, N3R... in the figure below), which are based on the RSSI values acquired by each terminal, are used as features for machine learning. The objective variables (labels) are the position coordinates (ax, ay) of the beacons.

Some fingerprint data imported from the database to an Excel spreadsheet
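As a rough illustration of how such rows could be turned into features and labels, here is a minimal sketch. The column names follow the table above, but the sample values, the use of exactly three terminal/distance pairs, and the integer encoding of terminal names are assumptions made for this example, not necessarily what we did in the actual system.

```python
# Hypothetical fingerprint rows; values are invented for illustration.
rows = [
    {"N1T": "T01", "N1R": 1.2, "N2T": "T02", "N2R": 3.4, "N3T": "T05", "N3R": 4.1, "ax": 0, "ay": 0},
    {"N1T": "T02", "N1R": 0.9, "N2T": "T03", "N2R": 2.8, "N3T": "T01", "N3R": 4.6, "ax": 4, "ay": 0},
]

# Assumption: terminal names are mapped to integer codes so every feature is numeric.
terminal_names = sorted({row[key] for row in rows for key in ("N1T", "N2T", "N3T")})
terminal_codes = {name: code for code, name in enumerate(terminal_names)}


def to_features(row):
    """Return [N1T, N1R, N2T, N2R, N3T, N3R] with terminal names encoded as integers."""
    features = []
    for i in (1, 2, 3):
        features.append(terminal_codes[row[f"N{i}T"]])
        features.append(row[f"N{i}R"])
    return features


X = [to_features(row) for row in rows]        # features
y = [(row["ax"], row["ay"]) for row in rows]  # labels: beacon coordinates
```

Integer codes impose an artificial ordering on the terminals, so one-hot encoding may be worth considering as an alternative.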

Position Estimation Using Machine Learning

Position Estimation with TCOT

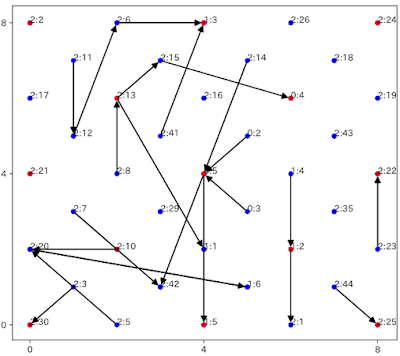

Before moving on to machine learning, please take a look at the following matplotlib plot result of the estimated positions calculated by our Two-Circles-Oriented Trilateration (TCOT):

Figure 1: Plot result of estimated positions by TCOT

The start point of each arrow is the actual position of the beacon, and the end point is the estimated coordinates calculated by TCOT.
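A plot of this kind can be sketched with matplotlib as follows. The coordinates here are invented, and the variable names and output file name are hypothetical; the sketch only shows one way to draw an arrow from each actual position to its estimate and to compute the length of each arrow as the positioning error.

```python
import math

import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt

# Hypothetical actual and estimated beacon positions in meters.
actual = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0)]
estimated = [(0.8, 0.5), (3.1, 1.2), (4.0, 2.5)]

fig, axes = plt.subplots()
for (x0, y0), (x1, y1) in zip(actual, estimated):
    # Arrow starts at the actual position (xytext) and ends at the estimate (xy).
    axes.annotate("", xy=(x1, y1), xytext=(x0, y0), arrowprops=dict(arrowstyle="->"))

# annotate() does not rescale the axes, so set the limits explicitly.
axes.set_xlim(-1, 5)
axes.set_ylim(-1, 5)
axes.set_xlabel("x (m)")
axes.set_ylabel("y (m)")
fig.savefig("estimated_positions.png")

# Length of each arrow, i.e. the positioning error per beacon.
errors = [math.dist(a, e) for a, e in zip(actual, estimated)]
print(sum(errors) / len(errors))
```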

Next, we introduce the machine learning tools we used this time and apply the same data used for TCOT to them to see how much the estimated positions improve.

Position Estimation with Orange Data Mining

One of the machine learning tools we used this time is Orange Data Mining. Orange Data Mining is an open source data mining tool developed and provided by the University of Ljubljana, Slovenia. Anyone can download and use it for free.

This tool lets you run machine learning without programming: you build a process flow by combining GUI modules for data import, model definition, prediction, and output.

Here is a sample of the operation screen.

This time, we estimated the beacon positions using the k-nearest neighbor model (kNN), one of many machine learning methods. In kNN, the teacher data is collected in advance, and all of it is converted into numerical values. Next, when operational data (called "test data" in machine learning textbooks) is passed to kNN, each test sample is matched to the closest teacher data, and the objective variables of that teacher data (here, its x and y coordinates) are returned.

The figure below is the plot of the estimated beacon positions returned by Orange kNN. Although creating the teacher data in advance takes time and effort, the error in the estimated positions is smaller than with TCOT in Figure 1.

Main parameters specified in Orange kNN:

- k: 1

- Metric: Manhattan

- Weight: Distance

Note:

kNN is an algorithm that classifies data based on teacher data. In this example, the data is classified into the objective variables (x, y coordinates) of the teacher. Therefore, even if the beacon is located at the coordinates (0.5, 0.5) in the above figure, the coordinates (0, 0) are the correct value algorithmically.
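To make this behavior concrete, here is a minimal plain-Python sketch of kNN with k = 1 and the Manhattan metric. It is not Orange's implementation, and the function names and sample values are hypothetical. It only illustrates that the returned coordinates are always those of one teacher sample, never a position in between.

```python
def manhattan(a, b):
    """Manhattan (city block) distance between two feature vectors."""
    return sum(abs(p - q) for p, q in zip(a, b))


def predict_1nn(teacher_features, teacher_labels, sample):
    """Return the label of the teacher sample closest to `sample`."""
    distances = [manhattan(features, sample) for features in teacher_features]
    nearest = distances.index(min(distances))
    return teacher_labels[nearest]


# Hypothetical teacher data: distances to three terminals, labeled with beacon coordinates.
teacher_features = [[1.0, 4.2, 5.8], [4.1, 1.1, 4.3], [5.9, 4.0, 0.9]]
teacher_labels = [(0, 0), (4, 0), (4, 4)]

# A beacon measured slightly away from the first teacher position
# still gets that teacher position's coordinates.
print(predict_1nn(teacher_features, teacher_labels, [1.6, 3.9, 5.5]))  # → (0, 0)
```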

Position Estimation with scikit-learn

Next, let us move on to scikit-learn, an open source machine learning library for Python.

As with Orange Data Mining, we adopted the k-nearest neighbor method here. KNeighborsClassifier and KNeighborsRegressor are used as the prediction models for the beacon coordinates.

Position estimation with KNeighborsClassifier

KNeighborsClassifier is the classification variant of the k-nearest neighbor method. We use it to estimate the positions of the beacons by supervised learning. The labels (classification classes, that is, the objective variables) are the X and Y coordinates, given as multinomial classes.

Parameter specifications:

nbrs.fit(teacherData, teacherXYLabel)

prediction = nbrs.predict(testData)

Main parameters:

- k: 1

- Algorithm: brute

- Metric: manhattan

- Weights: distance
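Put together, the calls and parameters above might look like the following sketch. The variable names nbrs, teacherData, teacherXYLabel, and testData come from the snippet above; the sample values are invented, and integer coordinates are assumed so that the classifier can treat them as class labels.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical teacher data: distances to three terminals per sample.
teacherData = np.array([[1.0, 4.2, 5.8], [4.1, 1.1, 4.3], [5.9, 4.0, 0.9], [4.0, 5.7, 4.1]])
# One (x, y) label pair per teacher sample; each column is treated as a set of classes.
teacherXYLabel = np.array([[0, 0], [4, 0], [4, 4], [0, 4]])
testData = np.array([[1.6, 3.9, 5.5], [5.5, 4.3, 1.2]])

nbrs = KNeighborsClassifier(
    n_neighbors=1,        # k: 1
    algorithm="brute",    # brute-force search
    metric="manhattan",
    weights="distance",
)
nbrs.fit(teacherData, teacherXYLabel)
prediction = nbrs.predict(testData)
print(prediction)  # one (x, y) row per test sample
```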

The plot result was almost the same as the result in Orange. However, although both Orange and scikit-learn use kNN, the estimated coordinates of individual beacons differed. This was a little surprising, since we expected Orange and scikit-learn to return almost the same results given the relative simplicity of the kNN algorithm. The reason may be the algorithm option called "brute" (brute-force search), which cannot be specified in Orange.

Position estimation with KNeighborsRegressor

Finally, let us use KNeighborsRegressor to estimate positions by supervised learning. KNeighborsRegressor is the regression variant of the k-nearest neighbor method. The labels (objective variables) are again the X and Y coordinates of the beacons.

Parameter specifications:

nbrs.fit(teacherData, teacherXYLabel)

prediction = nbrs.predict(testData)

Main parameters:

- k: 1

- Algorithm: brute

- Metric: manhattan

- Weights: distance

The plot result was the same as with KNeighborsClassifier.
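One likely explanation is that with k = 1 both models simply return the label of the single nearest teacher sample, so there is nothing to vote on or average. The sketch below checks this on invented data; the sample values are hypothetical and the comparison is only an illustration, not our actual test.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor

# Hypothetical teacher and test data (distances to three terminals).
teacherData = np.array([[1.0, 4.2, 5.8], [4.1, 1.1, 4.3], [5.9, 4.0, 0.9], [4.0, 5.7, 4.1]])
teacherXYLabel = np.array([[0, 0], [4, 0], [4, 4], [0, 4]])
testData = np.array([[1.6, 3.9, 5.5], [5.5, 4.3, 1.2], [4.2, 1.5, 4.0]])

params = dict(n_neighbors=1, algorithm="brute", metric="manhattan", weights="distance")
classifier = KNeighborsClassifier(**params).fit(teacherData, teacherXYLabel)
regressor = KNeighborsRegressor(**params).fit(teacherData, teacherXYLabel)

# With a single neighbor, the regressor's "average" is just that neighbor's label.
same = np.allclose(classifier.predict(testData), regressor.predict(testData))
print(same)
```

With a larger k the two would generally differ: the classifier votes among neighbor labels, while the regressor averages them and can return coordinates between teacher positions.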

See also:

k-nearest neighbors algorithm

A Simple Introduction to K-Nearest Neighbors Algorithm

Future Challenges

We have been trying to estimate the beacon positions with several methods. The parameters specified for the scikit-learn kNN models are the optimum found by GridSearchCV, but the accuracy of position estimation may improve a little with better feature scaling. We have also tried a neural network (MLPClassifier) but have not gotten satisfactory results yet. In the near future, we would like to try other estimators, including MLPClassifier.
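For reference, a parameter search combined with feature scaling could be set up roughly as follows. This is a sketch under assumptions: the synthetic data, the three hypothetical terminal positions, the choice of StandardScaler, and the parameter grid are all invented for illustration and are not the grid we actually searched.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in for fingerprint data: noisy distances from 60 beacon
# positions to three hypothetical terminals.
positions = rng.uniform(0, 8, size=(60, 2))
terminals = np.array([[0.0, 0.0], [8.0, 0.0], [4.0, 8.0]])
distances = np.linalg.norm(positions[:, None, :] - terminals[None, :, :], axis=2)
features = distances + rng.normal(0, 0.3, size=distances.shape)

# Scaling is part of the pipeline so it is refit on each training fold.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("knn", KNeighborsRegressor(algorithm="brute")),
])
param_grid = {
    "knn__n_neighbors": [1, 3, 5],
    "knn__metric": ["manhattan", "euclidean"],
    "knn__weights": ["uniform", "distance"],
}
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(features, positions)
print(search.best_params_)
```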

Before choosing the right estimator, however, there is another issue. While looking at the acquired data, we noticed that some terminals show large swings in RSSI values, and these swings seem to adversely affect position estimation. It may be more important to consider hardware approaches that reduce those fluctuations.
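One simple way to spot such terminals in the acquired data is to compare the spread of RSSI readings per terminal for a beacon that does not move. The sketch below uses invented readings, hypothetical terminal names, and an arbitrary 3 dB threshold; it is only an illustration of the check, not a measured result.

```python
from statistics import pstdev

# Hypothetical RSSI readings (dBm) of one stationary beacon, grouped by terminal.
rssi_by_terminal = {
    "T01": [-62, -63, -61, -62, -63],
    "T02": [-70, -58, -79, -61, -75],
    "T03": [-68, -67, -69, -68, -67],
}

THRESHOLD_DB = 3.0  # assumption: flag terminals whose standard deviation exceeds this

# Population standard deviation of the readings per terminal.
swing = {name: pstdev(values) for name, values in rssi_by_terminal.items()}
noisy = [name for name, deviation in swing.items() if deviation > THRESHOLD_DB]
print(noisy)  # → ['T02']
```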

Varista

Varista is a machine learning tool that can be used on the web without programming. We tried the free trial once; however, we ended up putting it aside for the time being after failed attempts to upload the same teacher/test data explained above. At the end of last year, we casually contacted Varista about this upload issue and unexpectedly got a quick and proper solution for uploading data files. Finally, we were able to obtain the estimated positions in a CSV file. The figure below plots the data from that result file.

Varista uses a decision tree-based algorithm with many configurable parameters. Although we used almost the default settings this time, the result was comparable to the kNN results above. This suggests that decision tree-based algorithms are also promising for IPS.
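Varista's own algorithm and settings are not reproduced here. As a rough stand-in for readers who want to try a decision tree on the same kind of data, the sketch below uses scikit-learn's DecisionTreeRegressor with default settings on invented sample values.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Hypothetical teacher and test data (distances to three terminals).
teacherData = np.array([[1.0, 4.2, 5.8], [4.1, 1.1, 4.3], [5.9, 4.0, 0.9], [4.0, 5.7, 4.1]])
teacherXYLabel = np.array([[0, 0], [4, 0], [4, 4], [0, 4]])
testData = np.array([[1.6, 3.9, 5.5], [5.5, 4.3, 1.2]])

# Stand-in only: a single scikit-learn tree, not Varista's model.
tree = DecisionTreeRegressor(random_state=0)
tree.fit(teacherData, teacherXYLabel)
prediction = tree.predict(testData)  # one (x, y) row per test sample
print(prediction)
```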

Varista Expert screen: various parameters are available

Added on January 5, 2021

Varista does not seem to have an API. We assume some users would be more excited if there were an API that would return results immediately upon posting test data from the web.

Added on August 18, 2021

The Varista service policy has changed, and you now need to send a request by email to get a free trial, obtain the price list, or sign up.

Sachiko Kamezawa

IPS Related Blog Posts

- IPS Application Templates for FileMaker ― QuickIPS ―*

- Indoor Mobile Position Monitoring Model with iBeacon/Raspberry Pi 1 ― Overview*

- Indoor Mobile Position Monitoring Model with iBeacon/Raspberry Pi 2 ― RSSI/Distance Calibration and Trilateration*

- Indoor Mobile Position Monitoring Model with iBeacon/Raspberry Pi 3 ― Enhancement of Two-Circles-Oriented Trilateration (TCOT) and Measurement Results*

- Estimate the approximate position of the beacon with a small number of receivers in a large area such as Tokyo Dome(Google translate)

- Improving IPS Positioning Accuracy with Machine Learning *

- TIPS for making IPS testbeds(Google translate)

- Object Tracking for Visualizing The Movements of People and Objects on a Map*

- Send Location Information via UDP - TPC_IPS/QuickIPS Extension*

- * indicates that original article translation into English has been completed by TPC.

- "Google translate" indicates that clicking it will Google translate the original article, and may be translated into English in the near future by TPC.
